Augmenting Your Dev Org with Agentic Teams

I thought I was fast. The data disagreed.

I’m 43, and I recently took my pit bike to the track convinced I still had it. The lap times said otherwise. Turns out I’m not the only one with a perception gap.

A 2025 METR study (arXiv:2507.09089) found that experienced developers using AI coding tools actually took 19% longer to complete tasks, while believing they were 20% faster. The gap reveals something important: agentic AI isn’t a plug-and-play productivity boost. It’s a fundamentally different way of working that requires deliberate adoption.

Since ServiceNow Knowledge 25, I have been experimenting with agentic AI both on my own and with clients. What follows is what I’ve found to work, what I’ve broken, and the lessons I’ve learned the hard way so you don’t have to.

The Opportunity

By late 2025, industry surveys suggested 80-90% of developers were using AI tools in some capacity (per Stack Overflow’s 2025 Developer Survey and Google’s DORA Report). But most are still on autocomplete. Agentic tools (systems that reason, plan, execute multi-step changes, and iterate on their own) are an entirely different animal.

What we’re talking about is the speed at which a team can experiment and innovate. Complex refactors that used to eat an iteration. Migrations that would’ve taken months. Architectural changes that were “too risky” because nobody had time. Agentic tools make these manageable.

Early adopters report significantly faster release cycles in some cases, and fewer bugs when agents enforce coding standards automatically. For a growing org, this means scaling without ballooning headcount. Your senior team punches above their weight. Agents handle CRUD apps, simple tools, and API glue code at dramatically lower labor costs than a decade ago. Some teams report saving several hours per developer per week. For a 50-person team, even three hours each adds up to 150 hours a week.

But remember: when you automate a broken system, you’re only perpetuating the pain inherent in that system. The productivity can be achieved, but only when the workflow is dialed in first.

For the Executive

If you’re in the C-suite, here is the BLUF: agentic teams should augment your organization to speed innovation and time-to-value. They should not replace actual humans.

The companies getting this wrong are using AI headcount math: “If agents can do 30% of the work, we need 30% fewer developers.” That’s a mistake. The companies getting it right are using AI leverage math: “If agents handle the repetitive work, our developers can ship features that were previously out of reach.”

Junior devs (yes, we still need junior devs) onboard faster with AI as a supervisor. Automated deployments minimize downtime. Predictive maintenance catches issues before production. The outcome you should expect: more predictable quality, fewer post-release fires, lower maintenance bills, and a team that can take on more ambitious projects.

The question to ask your CTO isn’t “how many heads can we cut?” It’s “what could we build that we couldn’t before?”

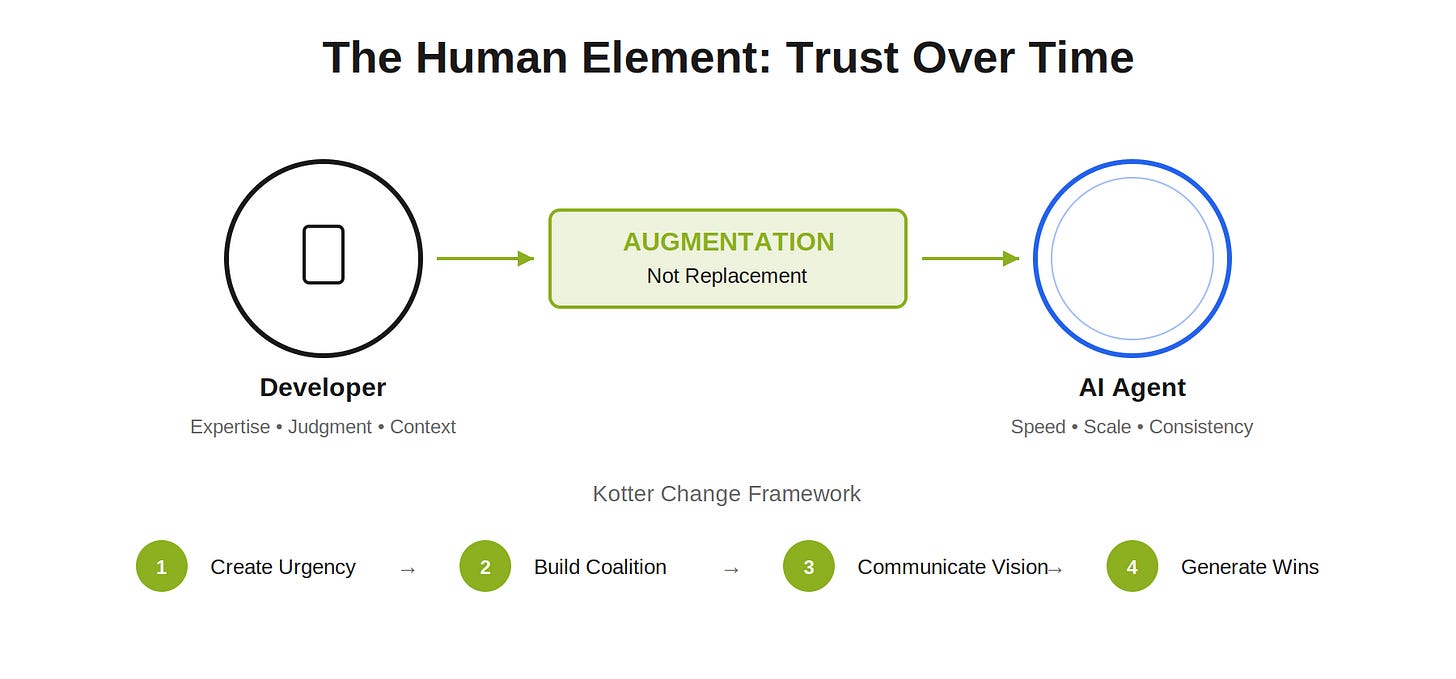

The Human Element

The biggest challenge to agentic AI adoption, same as with any change, is people. Specifically, the fear of being replaced.

Your senior developers have spent years building expertise. When you introduce a tool that can “write code,” their first thought isn’t “great, I’ll be more productive.” It’s “am I about to be automated out of a job?” That fear is rational, and ignoring it guarantees failed adoption.

The only solution is trust, and trust is built over time. This isn’t a technology rollout; it’s a change management exercise. Kotter’s framework applies: create urgency around the opportunity (not the threat), build a coalition of early adopters who can demonstrate success, communicate relentlessly that the goal is augmentation, not replacement, and generate visible short-term wins that benefit the team.

The teams that succeed are the ones where developers see agents as tools that make their work better, not threats that make their work disappear.

How to Think About Tools

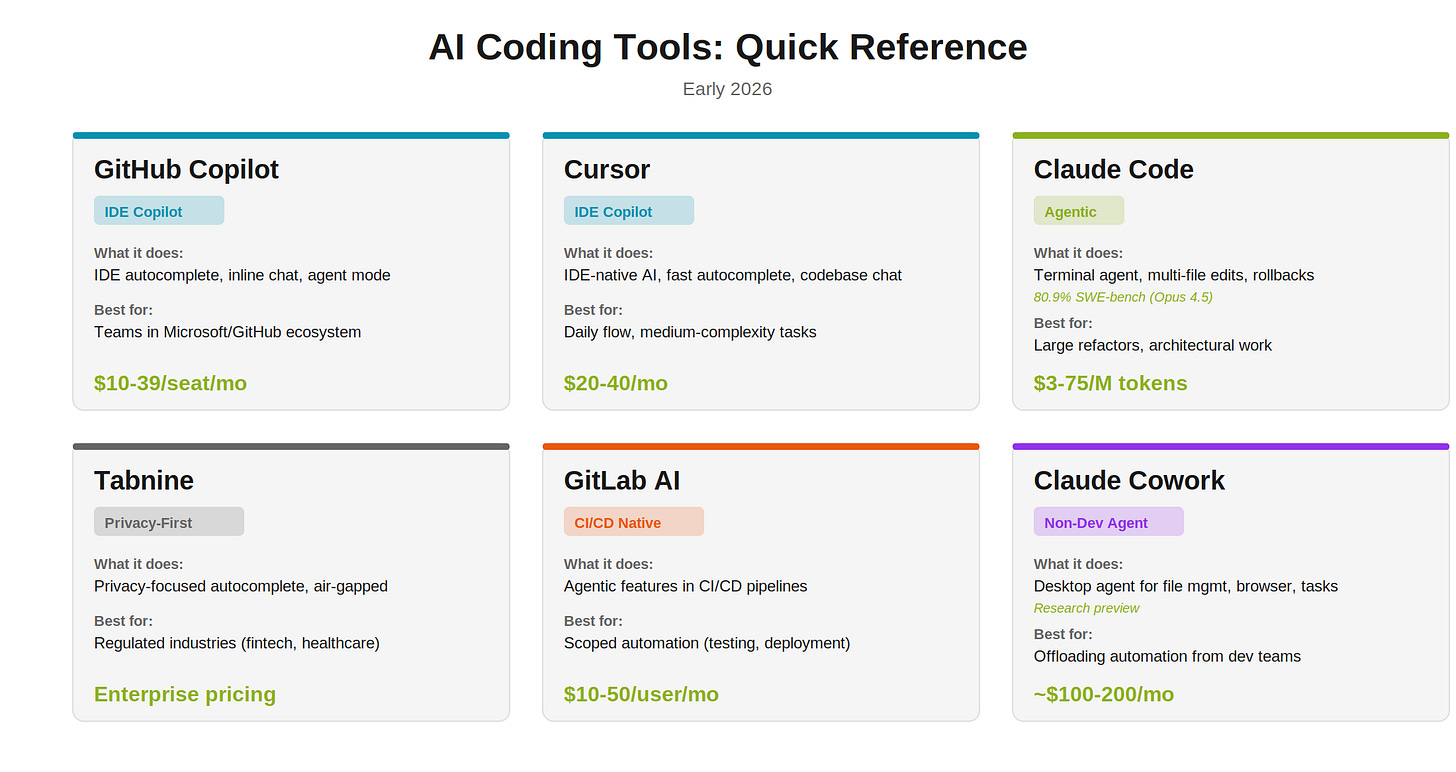

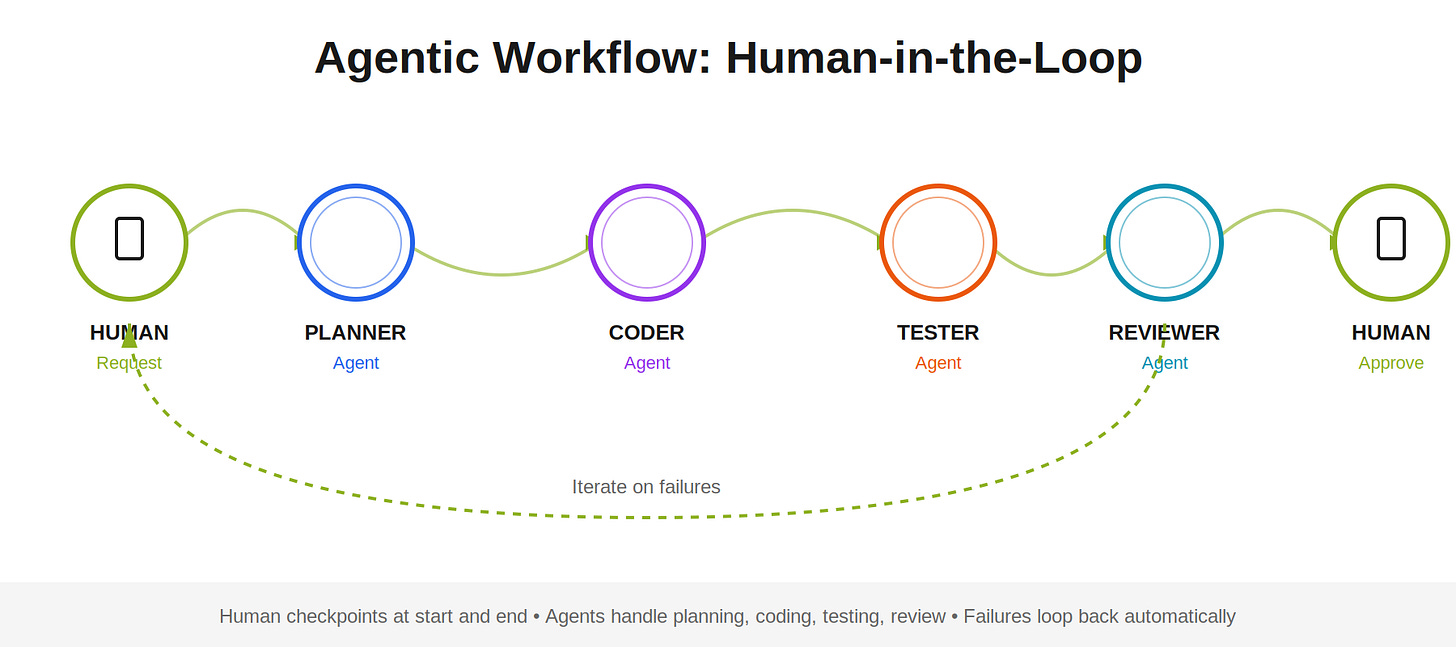

The market has split into two categories: IDE-first copilots that augment your editor line by line, and agentic systems that plan and execute multi-step changes with human checkpoints. Different problems, different tools.

Choosing which is best in your context is simpler than you may think. For daily flow (small tasks, quick completions, staying in the editor), you want a copilot like Cursor or GitHub Copilot. For complex work (large refactors, multi-file changes, architectural migrations), you want an agentic tool like Claude Code that can read your entire repo and execute a plan across dozens of files.

Don’t standardize on one tool. Smart teams use different tools for different jobs. The pattern I’m seeing work: Copilot for daily flow and peer reviews, Claude Code for planned refactors and migrations, Cursor for deep codebase work.

If your organization has strict data privacy requirements (fintech, healthcare, defense), tools like Tabnine offer air-gapped deployment. If you want agentic capabilities baked into CI/CD, GitLab AI and Apiiro are worth evaluating. If you have non-developers drowning in requests for simple automations, Claude Cowork (research preview, requires Claude Max subscription) can offload that demand to business teams directly.

Don’t chase features. Switching tools every month means you never build muscle memory or prompt engineering skills. Each switch resets your learning curve. Commit to each tool for 60-90 days. Learn its patterns. Evaluate alternatives only after you’re proficient.

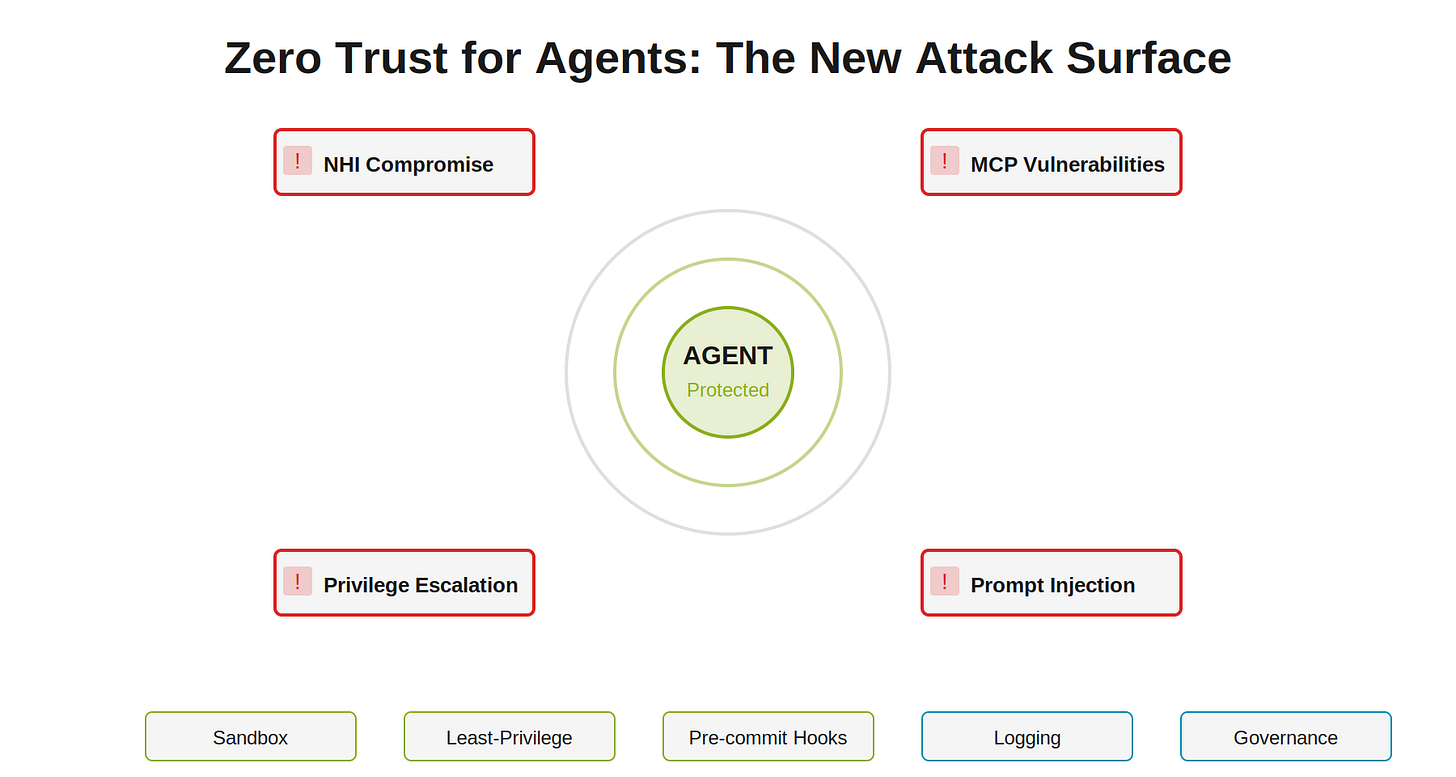

Security

This is where orgs feel the most anxiety, and that anxiety can lead to missteps. The most common one: treating agentic AI like just another dev tool when it’s actually a new attack surface. Generative AI creates content. Agentic AI executes commands. Different beast.

Compromised agents mean data leaks or malicious behavior. Dependency risks are huge: outdated libraries, unvetted code.

The threat landscape includes prompt injection (adversaries plant instructions that trick agents into executing malicious commands), cross-agent privilege escalation (low-privilege agents manipulated into tricking high-privilege agents), non-human identity compromise (hardcoded API keys giving attackers months of access), and Model Context Protocol (MCP) server vulnerabilities (shadow agents connecting LLMs to corporate databases without oversight).

Mitigation: Zero Trust for Agents. Every tool call and API request verified, logged, scoped. Think of agents as digital insiders. They can cause harm unintentionally or deliberately if compromised. Establish governance: ownership, monitoring tied to KPIs, escalation triggers, accountability standards. Treat LLM-generated code as untrusted. Sandbox everything. Enforce pre-commit hooks, automated scans. Least-privilege, no blanket access.
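
To make “every tool call verified, logged, scoped” concrete, here is a minimal Python sketch of a deny-by-default gate sitting between an agent and its tools. The agent IDs, tool names, and resource names are hypothetical, and a real deployment would back this with your identity provider and a tamper-evident log store rather than an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ToolCall:
    agent_id: str
    tool: str     # hypothetical tool name, e.g. "db.read"
    target: str   # resource the call touches, e.g. "orders"


@dataclass
class ToolGate:
    # Deny by default: an agent may only call tools explicitly granted to it,
    # and only against the resources listed for that tool.
    grants: dict[str, dict[str, set[str]]]
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, call: ToolCall) -> bool:
        allowed_targets = self.grants.get(call.agent_id, {}).get(call.tool, set())
        allowed = call.target in allowed_targets
        # Log every decision, including denials, so reviewers can reconstruct what happened.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": call.agent_id,
            "tool": call.tool,
            "target": call.target,
            "allowed": allowed,
        })
        return allowed


gate = ToolGate(grants={
    # Hypothetical least-privilege grant: read access to one table, no write tools at all.
    "refactor-agent": {"db.read": {"orders"}},
})

print(gate.authorize(ToolCall("refactor-agent", "db.read", "orders")))   # True
print(gate.authorize(ToolCall("refactor-agent", "db.write", "orders")))  # False
print(gate.authorize(ToolCall("unknown-agent", "db.read", "orders")))    # False
```

Logging denials as well as approvals matters: a spike in denied calls from one agent is exactly the kind of escalation trigger your governance model should watch for.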

Code Quality and Maintainability

AI-generated code quality varies wildly. Some companies see huge gains, others see nothing. The difference is process discipline.

The “vibe coding” backlash is something you can’t ignore. There’s an “AI slop” crisis in production codebases, and it is creating bigger problems than any low-cost offshore dev shop of the early 2000s ever did. One researcher documented a junior engineer merging 1,000 lines of AI-generated code that broke a test environment. The code was so convoluted that rewriting it from scratch was faster than debugging it.

Treat AI code like contractor code. Review it like it came from someone who doesn’t know your codebase. AI generates plausible code. It doesn’t understand why your team made specific architectural decisions.

Automate testing. Non-negotiable. The tools can run tests and iterate. Claude Code can run your test suite and fix failures before presenting code for review.
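
The run-tests-then-iterate loop can be sketched as follows. This illustrates the control flow only: `run_tests` and `propose_fix` are hypothetical stand-ins for your test runner and the agent, not Claude Code’s actual internals. The important design choice is the attempt cap, so a stuck agent escalates to a human instead of looping forever.

```python
from typing import Callable, Optional


def iterate_until_green(
    run_tests: Callable[[], list[str]],        # returns names of failing tests
    propose_fix: Callable[[list[str]], None],  # agent attempts a fix given the failures
    max_attempts: int = 3,
) -> Optional[int]:
    """Return how many fix attempts it took to get a green suite, or None if we gave up."""
    for attempt in range(max_attempts + 1):
        failures = run_tests()
        if not failures:
            return attempt  # green: ready for human review
        if attempt == max_attempts:
            break
        propose_fix(failures)
    return None  # still red after max_attempts: escalate to a human


# Simulated run: two tests fail at first, and the fake agent fixes one per attempt.
broken = ["test_parse_date", "test_totals"]

def fake_run_tests() -> list[str]:
    return list(broken)

def fake_propose_fix(failures: list[str]) -> None:
    broken.remove(failures[0])

print(iterate_until_green(fake_run_tests, fake_propose_fix))  # 2
```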

Don’t skip planning. Make the agent generate an architectural plan and review it before code gets written. This will catch misunderstandings before they become debt.
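
If you build your own agent tooling, one way to enforce “plan before code” is a hard gate: the plan is a data structure a human must approve before any code-writing step will run. The `Plan` type, its fields, and `execute` below are hypothetical; some agentic tools ship a built-in plan mode, so check your tool’s documentation for its actual mechanism.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Plan:
    goal: str
    steps: list[str]
    files_touched: list[str]
    approved_by: Optional[str] = None  # set only by a human reviewer

    def approve(self, reviewer: str) -> None:
        if not self.steps:
            raise ValueError("Refusing to approve an empty plan")
        self.approved_by = reviewer


def execute(plan: Plan) -> str:
    # Hard gate: no approved plan, no code changes.
    if plan.approved_by is None:
        raise PermissionError("Plan has not been reviewed; no code will be written")
    return f"Executing {len(plan.steps)} steps across {len(plan.files_touched)} files"


plan = Plan(
    goal="Replace hand-rolled session store with JWTs",
    steps=["Add token issuing in auth service", "Remove session table reads", "Update tests"],
    files_touched=["auth/service.py", "auth/middleware.py", "tests/test_auth.py"],
)
plan.approve("senior-reviewer")
print(execute(plan))  # Executing 3 steps across 3 files
```

Listing `files_touched` up front gives the reviewer a cheap way to spot scope creep before a single line is written.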

Watch for over-engineering. Agents tend to overbuild. One team reported Claude building a database-backed session management system when JWTs would’ve sufficed. Review for simplicity, not just correctness.

Studies suggest agent-generated code can significantly increase complexity and static-analysis warnings. Documentation matters more than ever. Humans carry mental context about why decisions were made. AI doesn’t. Require inline documentation that explains the why, not just the what.
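
Documentation rules stick better when a machine enforces them. Below is a minimal sketch of a pre-commit-style check built on Python’s standard `ast` module that flags functions with no docstring. It can only verify that documentation exists, not that it explains the reasoning; that still takes a human reviewer.

```python
import ast


def undocumented_functions(source: str) -> list[str]:
    """Return names of functions and methods in `source` that lack a docstring."""
    tree = ast.parse(source)
    missing = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if ast.get_docstring(node) is None:
                missing.append(node.name)
    return missing


sample = '''
def apply_discount(total):
    """Apply the flat 20% discount. Why: larger discounts need manual approval (example rule)."""
    return total * 0.8

def helper(x):
    return x + 1
'''

print(undocumented_functions(sample))  # ['helper']
```

Wired into a pre-commit hook, a non-empty result would block the commit until someone writes down the why.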

A Framework for Scaling

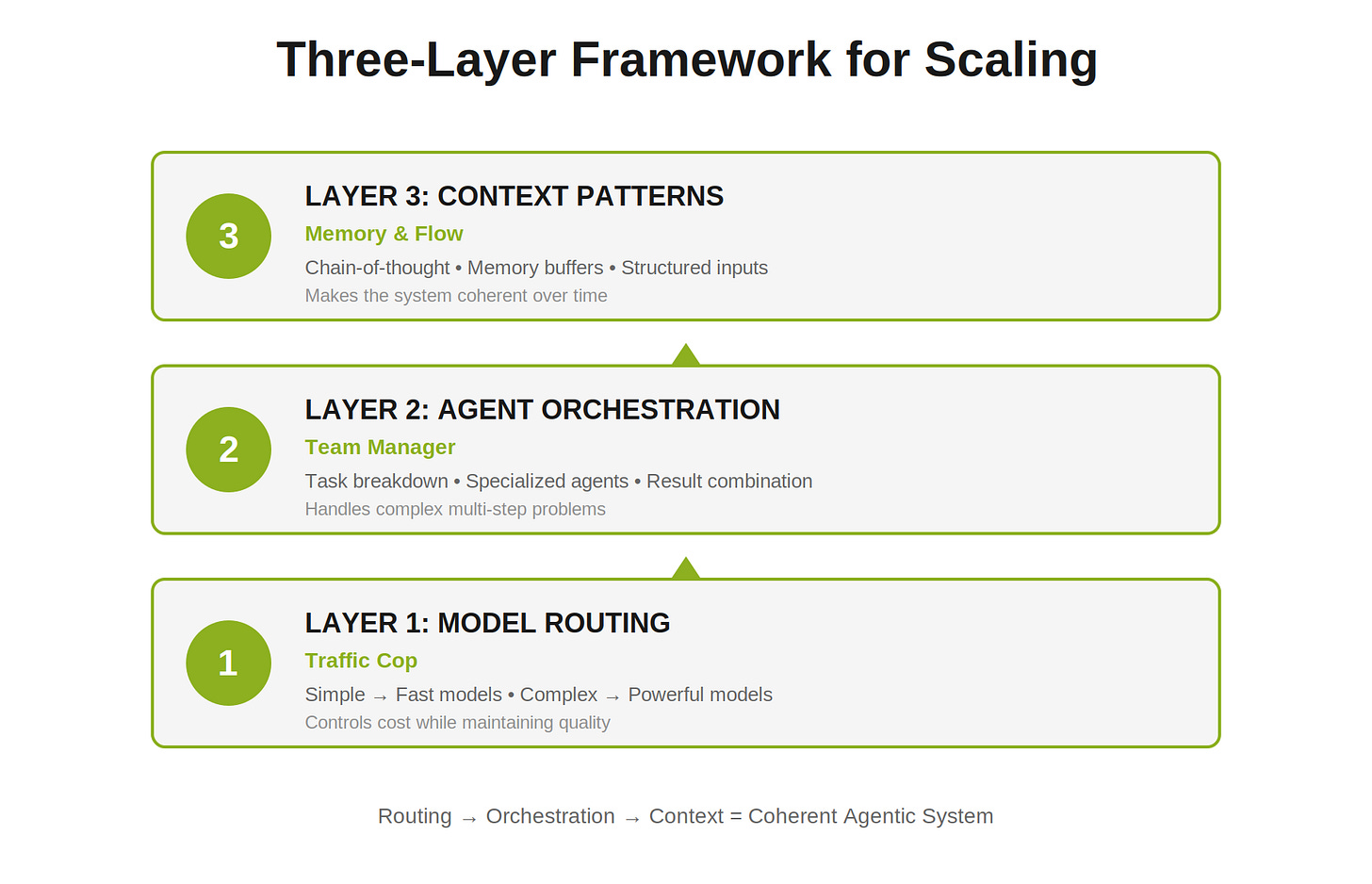

Once you move past pilots into production agentic systems, you need structure. A three-layer framework helps:

Layer 1: Model Routing (The Traffic Cop). Not every query needs your most expensive model. Route simple questions to fast, cheap models; route complex reasoning to powerful ones. This is how you control costs while maintaining quality. Without routing, you’re either overpaying or getting bad results.
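
A router can be as simple as a scoring heuristic in front of two model tiers. This sketch uses a deliberately naive keyword-and-length heuristic, and the model names and threshold are hypothetical placeholders. Learned routers such as LMSYS’s RouteLLM replace the heuristic with a trained classifier, but the control flow is the same.

```python
CHEAP_MODEL = "small-fast-model"        # hypothetical model identifiers
STRONG_MODEL = "large-reasoning-model"

# Naive signals that a request needs deeper reasoning (illustrative only).
HARD_SIGNALS = ("refactor", "migrate", "architecture", "debug", "why")


def complexity_score(prompt: str) -> float:
    """Rough 0..1 estimate of how much reasoning a prompt needs."""
    text = prompt.lower()
    signal_hits = sum(word in text for word in HARD_SIGNALS)
    length_factor = min(len(text.split()) / 200, 1.0)  # long prompts tend to be harder
    return min(0.5 * length_factor + 0.25 * signal_hits, 1.0)


def route(prompt: str, threshold: float = 0.4) -> str:
    """Send easy prompts to the cheap tier and hard ones to the strong tier."""
    return STRONG_MODEL if complexity_score(prompt) >= threshold else CHEAP_MODEL


print(route("What does HTTP 404 mean?"))  # small-fast-model
print(route("Plan how to migrate our monolith and refactor the auth module"))  # large-reasoning-model
```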

Layer 2: Agent Orchestration (The Team Manager). Some tasks are too big for one agent. Orchestration breaks them down, assigns parts to specialized agents, and combines the results. One agent searches, another calculates, a third summarizes. The orchestrator keeps it cohesive. This is how you handle complex, multi-step problems.
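
The search/calculate/summarize example maps onto a small orchestrator. In this sketch each “agent” is a plain Python function standing in for an LLM-backed agent, and the task decomposition is hard-coded where a real orchestrator would usually ask a planning model to produce it. All names and data are hypothetical.

```python
from typing import Callable


# Hypothetical specialist agents; in practice each would wrap its own model, prompt, and tools.
def search_agent(task: str, context: dict) -> dict:
    return {"figures": [120, 95, 143]}  # stand-in for a retrieval step


def calc_agent(task: str, context: dict) -> dict:
    figures = context["figures"]
    return {"average": sum(figures) / len(figures)}


def summary_agent(task: str, context: dict) -> dict:
    return {"summary": f"Average across {len(context['figures'])} sources: {context['average']:.1f}"}


AGENTS: dict[str, Callable[[str, dict], dict]] = {
    "search": search_agent,
    "calculate": calc_agent,
    "summarize": summary_agent,
}


def orchestrate(goal: str, plan: list[tuple[str, str]]) -> dict:
    """Run each (agent, subtask) step in order, merging outputs into a shared context."""
    context: dict = {"goal": goal}
    for agent_name, subtask in plan:
        result = AGENTS[agent_name](subtask, context)
        context.update(result)  # later agents see earlier agents' work
    return context


result = orchestrate(
    "Estimate the average of the collected figures",
    [("search", "collect figures"), ("calculate", "average them"), ("summarize", "report")],
)
print(result["summary"])  # Average across 3 sources: 119.3
```

The shared context is what keeps the result cohesive: each specialist only needs to know its own job and the keys it reads and writes.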

Layer 3: Context Patterns (Memory and Flow). Agents forget. Context patterns are reusable templates for handling memory, conversation history, and reasoning flow. Chain-of-thought prompting. Memory buffers for key facts. Structured inputs that guide better outputs. This is how you make the system feel coherent over time instead of starting fresh every interaction.
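
One of the simplest context patterns is a memory buffer that combines pinned key facts with a rolling window of recent turns. This sketch approximates token counts by word count, which is an assumption; a real system would use the model’s own tokenizer. The class and method names are hypothetical.

```python
from collections import deque


class ContextBuffer:
    """Pinned facts always survive; the oldest conversation turns are dropped first to fit a budget."""

    def __init__(self, budget_tokens: int):
        self.budget = budget_tokens
        self.facts: list[str] = []
        self.turns: deque[str] = deque()

    @staticmethod
    def _tokens(text: str) -> int:
        return len(text.split())  # crude stand-in for a real tokenizer

    def _used(self) -> int:
        return sum(map(self._tokens, self.facts)) + sum(map(self._tokens, self.turns))

    def pin(self, fact: str) -> None:
        self.facts.append(fact)

    def add_turn(self, turn: str) -> None:
        self.turns.append(turn)
        # Evict the oldest turns until facts + turns fit inside the budget.
        while self.turns and self._used() > self.budget:
            self.turns.popleft()

    def render(self) -> str:
        return "\n".join(["Key facts:", *self.facts, "Recent conversation:", *self.turns])


buf = ContextBuffer(budget_tokens=12)
buf.pin("Repo uses Python 3.12")                # 4 "tokens"
buf.add_turn("user: rename the Order class")    # 5 more, total 9
buf.add_turn("agent: renamed in 14 files")      # 5 more, total 14 > 12, oldest turn evicted
print(len(buf.turns))  # 1
```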

The layers stack: routing picks the right tools, orchestration assembles them into a team, context patterns make the whole thing coherent. Teams using this framework report significant reductions in refactoring time.

The Cost

Your API bill is just the tip of the iceberg. I learned this the hard way running experiments in my office. What started as a few hundred bucks a month in API calls turned into weeks of engineering time spent optimizing prompts, building infrastructure that could actually scale, navigating security compliance reviews that nobody budgeted for, and standing up monitoring stacks to figure out why things were breaking. That “reasonable” API bill became unreasonable fast. DX research and industry reports consistently show total costs running 5-10x higher than what shows up on the invoice.

The cost iceberg: Your 200-token demo becomes a 1,200-token production interaction once you add conversation history, user profiles, and system state. Demo failures become complex recovery workflows. Your token math assumes perfect inputs; production assumes everything breaks. Your systems weren’t built for AI, and AI wasn’t built for your systems. “Simple” integrations turn into building APIs that don’t exist.
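
Here is how a 200-token demo can balloon to roughly 1,200 tokens per interaction. The per-component sizes and the monthly request volume are illustrative assumptions chosen to match the figures in the paragraph above, not measurements; plug in counts from your own logs.

```python
# Illustrative token budget for one production request (all sizes are assumptions).
demo_prompt = 200
production_components = {
    "user prompt": 200,
    "conversation history": 600,
    "user profile": 150,
    "system state": 250,
}

production_total = sum(production_components.values())
print(production_total)                # 1200
print(production_total / demo_prompt)  # 6.0

# At a fixed per-token price, cost scales by the same 6x factor.
requests_per_month = 100_000           # hypothetical volume
print(production_total * requests_per_month)  # 120000000
```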

Subscription tools (Copilot, Cursor, Windsurf) run $10-40/seat/mo. Predictable. API tools can spiral: an agent on complex tasks can consume 5-10M tokens monthly, costing $45-150/mo with Claude Sonnet for a single workflow. Multiply that across a team and the bill grows quickly.
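
The spread in that range comes mostly from the input/output token mix. The arithmetic below assumes Claude Sonnet list prices of $3 per million input tokens and $15 per million output tokens; verify current pricing before budgeting. The token splits and team size are also assumptions.

```python
# Assumed list prices in USD per million tokens; check your provider's current pricing.
INPUT_PRICE_PER_M = 3.00
OUTPUT_PRICE_PER_M = 15.00


def monthly_cost(input_tokens: float, output_tokens: float) -> float:
    """Monthly API cost in USD for a given token mix."""
    return (input_tokens / 1e6) * INPUT_PRICE_PER_M + (output_tokens / 1e6) * OUTPUT_PRICE_PER_M


print(monthly_cost(2.5e6, 2.5e6))  # 45.0  (5M tokens, 50/50 split)
print(monthly_cost(5e6, 5e6))      # 90.0  (10M tokens, 50/50 split)
print(monthly_cost(0, 10e6))       # 150.0 (10M tokens, all output)

team_size = 20  # hypothetical: one such workflow per developer
print(monthly_cost(5e6, 5e6) * team_size)  # 1800.0
```

Output tokens cost five times as much as input tokens under these prices, so an agent that writes a lot of code burns budget far faster than one that mostly reads.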

Optimization strategies: Smart prompting can cut token usage significantly (PromptLayer research shows 40%+ reductions with concise prompts). Model routing (cheap models for simple tasks) can save up to 85% on certain workloads according to LMSYS benchmarks. Caching reduces redundant calls. Open-source frameworks (LangChain, LlamaIndex) can lower costs compared with proprietary options, but they require more expertise.
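
Caching is the easiest of these to prototype: identical requests to the same model should not be paid for twice. This sketch memoizes responses in memory, keyed on a hash of model and prompt; `call_model` is a hypothetical stand-in for your real API client. Exact-match caching only helps with truly repeated calls, and a production version would need eviction and expiry. Some providers also offer server-side prompt caching for shared prompt prefixes, configured through their own APIs.

```python
import hashlib
from typing import Callable


class ResponseCache:
    """Exact-match cache: the same (model, prompt) pair is only sent to the API once."""

    def __init__(self, call_model: Callable[[str, str], str]):
        self._call_model = call_model
        self._store: dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        response = self._call_model(model, prompt)  # only cache misses cost money
        self._store[key] = response
        return response


# Hypothetical stand-in for a real API client.
def fake_call_model(model: str, prompt: str) -> str:
    return f"[{model}] answer to: {prompt}"


cache = ResponseCache(fake_call_model)
cache.complete("small-fast-model", "Summarize the changelog")
cache.complete("small-fast-model", "Summarize the changelog")
print(cache.hits, cache.misses)  # 1 1
```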

For 10-20 developers, budget $200-800/dev/mo in subscriptions, with total business cost at 5-10x that when you add integration, training, and maintenance. Enterprise implementation runs $50K-200K for rollout.

The economics still work. A skilled US developer costs $100-150/hr fully loaded (salary plus benefits, taxes, overhead). A $2K/mo AI investment delivering 3x on routine tasks pays for itself quickly.
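
The break-even arithmetic is worth doing explicitly, including the 5-10x total-cost multiplier cited earlier. This sketch assumes the low end of the figures in this section: a $2K/mo tooling spend, a 5x multiplier, a $100/hr loaded rate, a 10-person team, and roughly four weeks per month. Swap in your own numbers.

```python
def breakeven_hours(monthly_tool_spend: float, cost_multiplier: float, hourly_rate: float) -> float:
    """Developer hours per month the tooling must save to cover its total cost."""
    total_monthly_cost = monthly_tool_spend * cost_multiplier
    return total_monthly_cost / hourly_rate


hours = breakeven_hours(monthly_tool_spend=2_000, cost_multiplier=5, hourly_rate=100)
team_size = 10
print(hours)                  # 100.0 hours/month across the team
print(hours / team_size)      # 10.0 hours per developer per month
print(hours / team_size / 4)  # 2.5 hours per developer per week
```

Without the multiplier, the whole team only needs to save 20 hours a month. With it, each developer needs to save about 2.5 hours a week, which is in line with the “several hours per developer per week” some teams report.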

Risks

Agentic AI isn’t perfect. Without oversight, agents spit out bad code that takes longer to fix, or nuke your production DB. (Looking at you, Replit horror stories.) Stack Overflow’s 2025 Survey found only 3% of developers “highly trust” AI output, while 46% actively distrust it. Maintainability is a top concern: DX research shows code quality impacts vary wildly by organization.

Hallucinations and errors: agents “vibe code” without understanding, creating vulnerabilities. Over-reliance degrades dev skills. Diminishing returns if your team’s already saturated with AI IDEs. Accountability shifts to humans overseeing agents. Track provenance or you’re the one holding the bag.
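
Provenance tracking can start as simply as structured trailers on every agent-assisted commit. Git supports `Key: value` trailers in the final paragraph of a commit message (`git interpret-trailers` works with them), so a team could adopt a convention like the one below. The `AI-*` trailer keys and all field values are hypothetical; `Reviewed-by` is a widely used existing convention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provenance:
    agent: str       # tool that generated the change
    model: str       # model identifier reported by the tool
    prompt_ref: str  # pointer to the stored prompt or plan (ticket, file, hash)
    reviewer: str    # human accountable for the merge

    def trailers(self) -> str:
        # Hypothetical AI-* keys; Reviewed-by is a common existing convention.
        return "\n".join([
            f"AI-Agent: {self.agent}",
            f"AI-Model: {self.model}",
            f"AI-Prompt-Ref: {self.prompt_ref}",
            f"Reviewed-by: {self.reviewer}",
        ])


def commit_message(summary: str, body: str, prov: Provenance) -> str:
    # Trailers go in the last paragraph of the message, separated by a blank line.
    return f"{summary}\n\n{body}\n\n{prov.trailers()}\n"


msg = commit_message(
    "Replace session table with JWT auth",
    "Generated with agent assistance; plan reviewed before implementation.",
    Provenance("example-agent", "example-model-id", "PLAN-123", "Jane Doe <jane@example.com>"),
)
print(msg)
```

Because trailers are plain text in the commit message, `git log --grep` can later find every commit a given agent touched and who signed off on it.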

Treat it like buying a used sauna on Facebook Marketplace. Great deal if it works. Inspect it first.

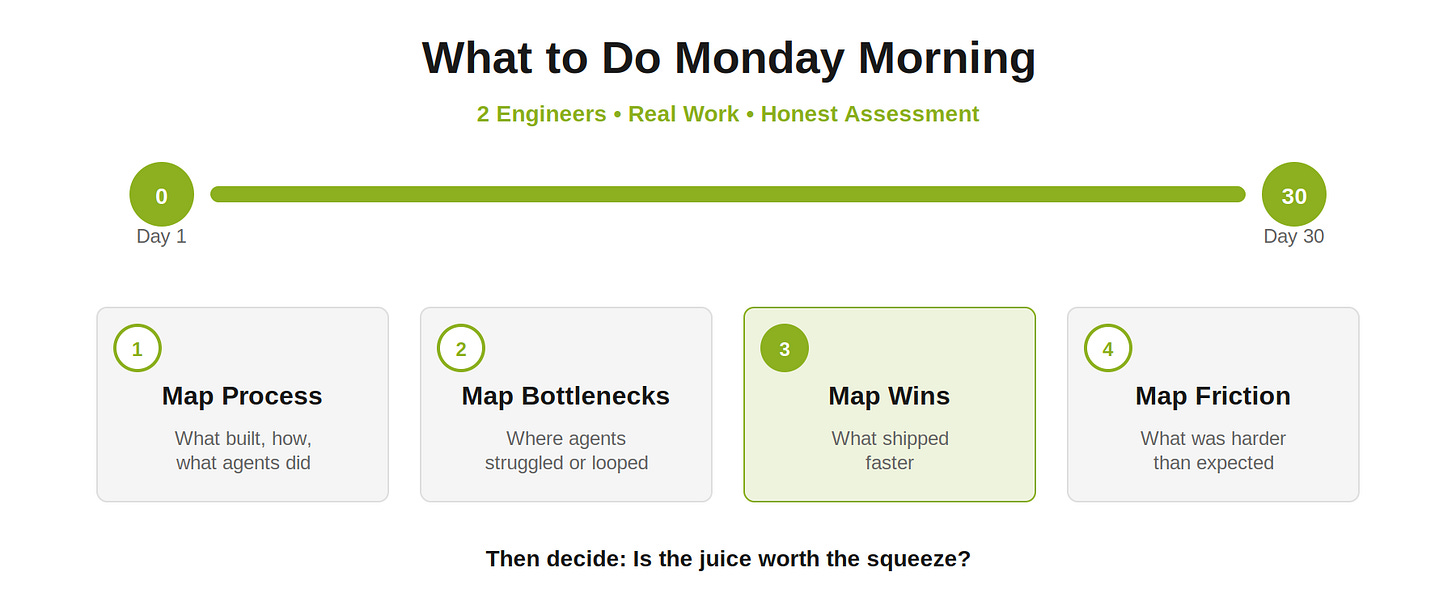

What to Do Monday Morning

Build a team of two engineers. Give them 30 days to see what an agentic team can deliver within your organization’s architecture.

Map the process: what did they build, how did they build it, what did the agents actually do?

Map the bottlenecks: where did agents struggle, loop, or produce garbage?

Map the wins: what shipped faster than it would have otherwise?

Map the friction: what was harder than expected, what required workarounds, what annoyed the team?

After 30 days, look at the results. Identify which friction points you can remove. Decide if the juice is worth the squeeze.

One caveat: if your value streams, business architecture, or technical architecture are already a mess, fix that first. AI doesn’t fix broken systems. It automates them. You’ll just experience the same pain you already have, faster and more often.

That’s it. Not a massive transformation initiative. Not a tool procurement process. Two engineers, 30 days, and an honest assessment.

Closing

Agentic AI is a fundamental economic shift in software development. Not a productivity tool. The orgs capturing value treat this as an operating model change, not a tool purchase.

The cost of not leveraging this is greater than you think. Ask why your competitors are adopting it, and what you’re missing.

The era of “magic” AI coding is over. The era of managed, verified, economically rational AI engineering has begun.

If you would like help designing and running your agentic-AI experiment, or scaling throughout your organization, reach out to Adam at adam@adammattis.com.

──────────────────────────────────────

Got war stories? @AdamMattis13

Sources

METR Study: “Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity” (arXiv:2507.09089, July 2025). metr.org

Stack Overflow: 2025 Developer Survey. survey.stackoverflow.co/2025

Google DORA: 2025 State of AI-assisted Software Development Report. cloud.google.com/devops

Anthropic: Claude Opus 4.5 System Card and SWE-bench Verified Results (November 2025). anthropic.com

DX: AI-assisted Engineering: Q4 Impact Report (2025). getdx.com

LMSYS/RouteLLM: Model routing research (ICLR 2025). github.com/lm-sys/RouteLLM

PromptLayer: “How to Reduce LLM Costs” (2024). blog.promptlayer.com